Building a queue in Mojo

Published:

The Mojo programming language is officially open source, so what better opportunity to dig around and see what it can do? I decided on a simple graph search problem, Advent of Code Day 12, 2022.

The input is a string of the form

Sabqponm

abcryxxl

accszExk

acctuvwj

abdefghi

and you have to find the shortest path from the S node to the E node, but you are only allowed to step to an adjacent node that is at most 1 “higher” than your current position, where a has height 1, b has height 2, and so on. To solve this, I decided to implement a basic breadth-first search.

To do BFS, you need a queue data structure, which Mojo currently lacks, so my first goal was to implement that. I began by cannibalizing the List data type, which is a dynamically resizing array-like structure already implemented in the Mojo standard library. My idea for the Queue data type was to store the queue as an array, dynamically resize it like a List, but also track the indices of the first and last elements. The added complexity (compared to a List) is that you pop elements from the front of a queue, but append them to the back. So not only is your array growing and shrinking as you push and pop, the actual location of the values is moving around in the array. However, the data will always be contiguous, i.e., every element between the first and last element of the queue is properly within the queue.

The Queue data type

We define the Queue data type as follows:

struct Queue[T: CollectionElement](Movable, Sized):

var data: AnyPointer[T]

var size: Int

var capacity: Int

var left: Int

var right: Int

The data, size, and capacity fields are all directly taken from the List type. They correspond to, respectively, a heap pointer indicating the start of an array, the number of elements in the array, and the maximum number of elements in the array. The left and right fields track the indices of the first and final elements.

The __init__ method simply zeroes out these fields. I also overloaded the __init__ method to allow for a pre-defined capacity. This will be useful later on.

fn __init__(inout self):

self.data = AnyPointer[T]()

self.size = 0

self.capacity = 0

self.left = 0

self.right = 0

fn __init__(inout self, *, capacity: Int):

self.data = AnyPointer[T].alloc(capacity)

self.size = 0

self.capacity = capacity

self.left = 0

self.right = 0

For dynamic resizing, the key method is _realloc, which reallocates more (or less) memory as the capacity of the array changes. The function is pretty straightforward: the self.size field knows the number of elements in the queue, and self.left defines the offset of the first element. We copy all elements starting from this offset into the new array, change the self.data field to point to the new array, and reset the capacity, left index, and right index.

fn _realloc(inout self, new_capacity: Int):

var new_data = AnyPointer[T].alloc(new_capacity)

for i in range(self.size):

# Copy the data between the left and right pointers

(new_data + i).emplace_value((self.data + self.left + i).take_value())

# Reset the left and right pointers

self.left = 0

self.right = self.size

if self.data:

self.data.free()

self.data = new_data

self.capacity = new_capacity

With _realloc defined, the push and pop operations are easy to implement. As we push elements onto the array, we may encounter the final allocated address. If this occurs, we reallocate with double the memory (in order to preserve O(1) amortized complexity). A push operation needs to increase both the size field (because the queue has grown) and the right field (because the position of the final element has increased).

It’s important to note that we check whether the right index has exceeded the capacity, rather than checking if the size has exceeded the capacity. This is because, with our implementation, the size could be fairly small even when the right index reaches the end of allocated memory.

fn push_back(inout self, owned value: T):

if self.right >= self.capacity:

# Resize the array if we run out of space

self._realloc(_max(1, self.capacity * 2))

(self.data + self.right).emplace_value(value ^)

self.size += 1

self.right += 1

The pop operation is even simpler. We take the value from the front of the array (whose value is stored at the offset given by the left index) and return that. We also decrease the size value (because an element has left the array) and increment the left value (because the start of the array has moved up). I also copied the reallocation logic from the Mojo List type that will halve the capacity when the array gets very small. This isn’t necessary for the Advent of Code problem, but is a nice way to keep memory usage low.

fn pop_front(inout self) -> T:

var ret_val = (self.data + self.left).take_value()

self.size -= 1

self.left += 1

if self.size * 4 < self.capacity:

# Resize the array if we're using a ton of space

if self.capacity > 1:

self._realloc(self.capacity // 2)

return ret_val ^

That’s actually it! I included some boilerplate methods too, but there’s nothing fancy there. The full source code can be found in the repo.

Solving the problem

Recall that the AoC problem has us traversing a rectangular graph with ASCII characters at each coordinate. I wrote a few convenience functions to help manage this traversal. The height function returns an Int value equal to a character’s numerical ASCII value, with the exception of S and E which are specified to be the same as a and z, respectively, by the problem.

@always_inline

fn height(c: String) -> Int:

if c == "S":

return ord("a")

if c == "E":

return ord("z")

return ord(c)

I also wanted a Point type that simply stores the row and column coordinates of a point in the graph. I needed a special type for this for a few reasons:

- I needed something hashable, because BFS requires us to track “visited” nodes of the graph, and we want O(1) lookup for that. This rules out the

Tuple[Int, Int]type, which doesn’t have__hash__implement (yet?) - I had very weird issues using a

List[List[Bool]]for this. This should be an easier implementation, where we just initialize a grid ofFalsevalues of the same shape as our graph, then flip them toTruewhen we visit. For reasons I do not remotely understand (and am unable to give a small working example of), this didn’t always work. But it did work sometimes. I’m not sure what the deal is, to be honest.

The good news: it’s easy to create our own Point type in Mojo. Mine implements the KeyElement trait so that it can be used as a key in dicts, and an element in collections, etc. The only surprise should be the __hash__ implementation. My original implementation for __hash__ was to xor the hashes of the two integer coordinates, like

fn naive_hash(p: Point) -> Int:

return hash(point.r) ^ hash(point.c)

For reasons I don’t understand, this won’t work. This should work; it’s basically the implementation of hash for Set in Mojo stdlib:

fn __hash__(self) -> Int:

var hash_value = 0

# Hash combination needs to be commutative so iteration order

# doesn't impact the hash value.

for e in self:

hash_value ^= hash(e[])

return hash_value

When I tried this naive implementation, however, I got frequent segmentation faults when trying to add a Point to a set. I figure this is some weird allocation issue with the Dict type (that Set relies on), so I trimmed down the maximum value this hash could produce by computing it modulo 10,000. This seems to avoid the seg faults, though it measurably slows down program execution. So here’s the entire Point implementation:

@value

struct Point(KeyElement):

var r: Int

var c: Int

fn __hash__(self) -> Int:

return (hash(self.r) ^ hash(self.c)) % 10_000 # ??

fn __eq__(self, other: Self) -> Bool:

return self.r == other.r and self.c == other.c

fn __ne__(self, other: Self) -> Bool:

return not self.__eq__(other)

With the Point type in hand, we can create some convenience functions for finding neighbors. The first is a pretty typical one that determines the directions we might be able step based on the grid boundaries. This is something you might inline with a comprehension in Python, but Mojo doesn’t have that (yet).

The get_neighbor_cords function

fn get_neighbor_coords(p: Point, nrows: Int, ncols: Int) -> List[Point]:

var neighbors = List[Point]()

if p.r > 0:

neighbors.append(Point(p.r - 1, p.c))

if p.c > 0:

neighbors.append(Point(p.r, p.c - 1))

if p.r < nrows - 1:

neighbors.append(Point(p.r + 1, p.c))

if p.c < ncols - 1:

neighbors.append(Point(p.r, p.c + 1))

return neighbors

The valid_neighbors function produces the list of neighbors that we can actually move to based on the rule that the height of our location can increase by at most 1.

fn valid_neighbors(p: Point, graph: List[String]) -> List[Point]:

var valid_neighbors = List[Point]()

var height_here = height(graph[p.r][p.c])

var neighbors = get_neighbor_coords(p, nrows=len(graph), ncols=len(graph[0]))

for i in range(len(neighbors)):

var neighbor = neighbors[i]

var there = graph[neighbor.r][neighbor.c]

if height_here >= height(there) - 1:

valid_neighbors.append(neighbor)

return valid_neighbors

Our BFS implementation is now extremely easy. It’s basically Python at this point.

fn bfs(graph: List[String], start: Point, end: Point) -> Int:

var enqueued = Set[Point]() # tracks nodes have been enqueued

var q = Queue[Point]() # the queue

var dist = 1 << 31 # initialize to some huge number to make minimum computations easier

var level = 0

q.push_back(start) # enqueue the start

enqueued.add(start)

while len(q) > 0:

var level_size = len(q)

while level_size > 0:

var u = q.pop_front()

if u == end:

# early return if we've found the end

return level

var neighbors = valid_neighbors(u, graph)

for i in range(len(neighbors)):

# iterate over the neighbors

var neighbor = neighbors[i]

if neighbor neighbor not in enqueued:

# enqueue anything new

q.push_back(neighbor)

enqueued.add(neighbor)

level_size -= 1

level += 1

# we only get here if we didn't find the end

return dist

Although “sugar” is explicitly not a development priority for Mojo, I found that it was very easy to do a lot of the operations needed to solve the AoC problem. For example, the start of my main function is very nearly valid Python

fn main() raises:

var contents: String

with open("src/input.txt", "r") as file:

contents = file.read()

var lines = contents.strip().split("\n")

The rest of the main function is just finding the start and end points, then running BFS on them appropriately.

Sharp edges

There’s a lot to like about Mojo, but complaining is more fun than praising. Here’s what caused me pain during this process.

1. ListLiteral is different from List

I think this is some kind of compile-time check, but it’s super annoying to try to test your code on some literal input only to be told that you have the wrong data type. For example, the following code gives an error:

fn test_list(x: List[Int]) -> Int:

var acc = 0

for v in x:

acc += v[]

return acc

fn main():

var x = [1,2,3]

print(test_list(x)) # invalid call to 'test_list': argument #0 cannot be converted from 'ListLiteral[Int, Int, Int]' to 'List[Int]'

2. Iteration returns a Reference

I admit this is a weak complaint, but the List.__iter__ returns something of type Reference, and the way you access the value of that reference is by appending []. This is weird. For example,

fn test_iter(my_list: List[Int]):

for x in my_list:

print(x) # error

print(x.value) # error (x.value has type !lit.ref<_stdlib::_builtin::_int::_Int, imm x>)

print(x[]) # this one works

Is this idiomatic? Will it always be? I’m not sure. I felt more comfortable iterating over the length of the List and grabbing the value at each index, e.g.,

fn test_iter(my_list: List[Int]):

for i in range(len(my_list)):

var x = my_list[i]

print(x)

3. Unsafe code

At no point did Mojo prevent me from mucking around with pointers. As I tested my Queue implementation, I got plenty of segmentation faults caused by bad pointer arithmetic. This is obviously not the long-term goal for a language with a borrow checker. Rust, for example, requires you to wrap that kind of code in an unsafe block.

Notably, a lot of the unsafe behavior is actually contained in the standard library. It’s the Dict type that caused the seg faults with my home-grown __hash__ method. The List function has no bounds-checking for its pop method. (Amusingly, the Set type does allow its pop method to raise an exception, so users are required to wrap it in a try/except block.) I don’t know what the long-term goal is here. Raising an exception is “Pythonic,” but returning an Option is what Rust does, and might be preferred by most users.

4. The Tuple interface is insane

Suppose I have a tuple like this:

var x: Tuple[Int, Int] = (10, 20) # type annotation is optional

How do you access its elements? In Python you would use x[0] and x[1]. In Rust, you use x.0 and x.1. In Mojo? x.get[0, Int]() and x.get[1, Int]().

Let’s unpack this. The reason tuple element access is different from array access is that tuples can have different types for their elements. For example,

var y: Tuple[Int, Float] = (10, 20.0)

This means that random access to a tuple is pretty much impossible in a statically typed language. Consequently, there’s a compile-time check to see whether the access is correct. That’s why in Rust, trying to access something out of bounds returns a compiler error. (Try printing x.2 in the above example. You get an error “no field 2 on type ({integer}, {integer}).)

Since Mojo currently shoves all of its compile-time computations into the square-bracketed arguments on functions, it makes some amount of sense to put the get arguments there. Presumably the Int argument is required because there’s no value inference for compile-time parameters. Of course, the value is inferrable because it’s just the type of the corresponding element. What this all adds up to, though, is an interface that is comically verbose for a very common operation.

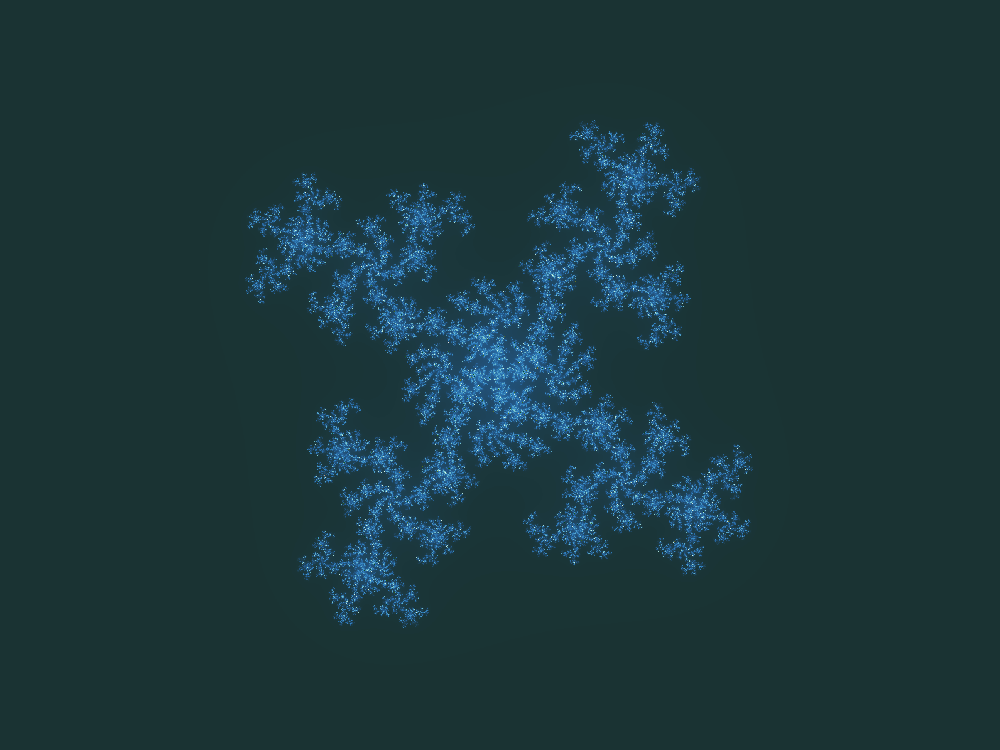

Image: the Julia set for $f(z) = z^4 + 0.6 + 0.55i$, which the author could not elegantly work into this post.

Image: the Julia set for $f(z) = z^4 + 0.6 + 0.55i$, which the author could not elegantly work into this post.